Best Gfx Card For Mac

Earlier this week Razer, best known for its PC laptops and gaming-related peripherals, announced a new external graphics (eGPU) solution called the Razer Core X. Razer already produces an eGPU called the Razer Core V2, but the Core X is a budget-friendly eGPU box that’s being marketed at both Mac and PC users. Compared to the Core V2, the Core X is a more straightforward solution, eschewing niceties like USB, Ethernet, and RGB lighting in favor of a less expensive, more corporate-looking external graphics solution.

Is the Razer Core X a good solution for Mac users in search of an eGPU? How does it stack up to the current crop of Mac-compatible external graphics boxes on the market? Watch our hands-on video walkthrough for the details.

The Best Graphics Cards of 2018

By Joel Hruska

Our guide shows you what to look for when buying or upgrading your video card, including side-by-side spec comparisons and the latest reviews of the top-rated GPUs.

The Importance of Graphics Power

Whether you're looking to upgrade an existing desktop, build a new one from scratch, or choose a laptop that fits your needs and budget, the graphics solution you choose could have a significant impact on your overall experience. PC makers often de-emphasize graphics cards in favor of promoting CPU, RAM, or storage options. While all of these components are important, having the right graphics card (often dubbed 'GPU') matters as well, and this guide will help you pick the best options for your desktop PC. A modern GPU, whether discrete or integrated, handles the display of 2D and 3D content, drawing the desktop, and decoding and encoding video content. In this guide, we'll discuss how to evaluate GPUs, what you need to know to upgrade an existing system, and how to evaluate whether or not a particular card is a good buy. We'll also touch on some upcoming trends and how they could affect which card you choose.

Starting With the Basics

All of the discrete GPUs on the market are built around graphics processors designed by one of two companies: AMD or Nvidia. Their GPU designs are sold by a wide range of resellers, some of whom also release their own custom products with different cooler designs, slight factory overclocks, or features such as LED lighting. From 2016 to mid-2017, Nvidia had the upper end of the performance market completely to itself, but now that AMD's Radeon RX Vega cards are on sale, you're no longer limited to Nvidia if you're spending $300 or more on a GPU. GPUs from these two companies are typically grouped into families of graphics processors, all of which share some common naming conventions. For the past eight years, Nvidia has followed a common naming format: a prefix, a model number, and a suffix.

If two GPUs have the same model number, such as the GeForce GTX 750 and the GTX 750 Ti, the suffix 'Ti' denotes the higher-end part. Nvidia has also been known to use an 'X' or 'Xp' to denote certain extremely high-end parts.

AMD's nomenclature is similar, with the prefix 'RX,' a three-digit model number, and, at times, a suffix (typically XT or XTX). Be advised, however, that these are rules of thumb rather than absolutes.
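The prefix, model number, and suffix convention can be illustrated with a small Python parser. This is a minimal sketch and not an official scheme from either vendor. The regular expression is a hypothetical simplification that recognizes only the GT, GTX, and RX prefixes and the Ti, XT, and XTX suffixes. Names such as "Radeon RX Vega 64" or "Titan Xp" deliberately fall outside it, which matches the rules-of-thumb caveat.

```python
import re
from typing import NamedTuple, Optional


class GpuName(NamedTuple):
    prefix: str            # e.g. "GTX" or "RX"
    model: int             # e.g. 750 or 580
    suffix: Optional[str]  # e.g. "Ti", or None when absent


# Hypothetical, deliberately narrow pattern: prefix, 3-4 digit model, optional suffix.
_NAME_PATTERN = re.compile(r"\b(GTX|GT|RX)\s+(\d{3,4})(?:\s+(Ti|XTX|XT))?\b")


def parse_gpu_name(name: str) -> Optional[GpuName]:
    """Split a marketing name into prefix, model number, and suffix, if it fits the pattern."""
    match = _NAME_PATTERN.search(name)
    if match is None:
        return None  # the name falls outside this rule of thumb
    prefix, model, suffix = match.groups()
    return GpuName(prefix, int(model), suffix)


def is_higher_end_variant(a: GpuName, b: GpuName) -> bool:
    """True when a and b share prefix and model number but only a carries a suffix (e.g. Ti)."""
    return (a.prefix, a.model) == (b.prefix, b.model) and a.suffix is not None and b.suffix is None


print(parse_gpu_name("GeForce GTX 750 Ti"))  # GpuName(prefix='GTX', model=750, suffix='Ti')
```

Returning `None` rather than guessing keeps the helper honest about names that break the pattern.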

There are a few key metrics to keep in mind when comparing video cards: engine clock speed, core count, onboard VRAM (memory), memory bandwidth, memory clock, and, of course, pricing.

Engine Clock Speed and Core Count

When comparing GPUs from the same family, you can generally assume that a higher clock speed (the speed at which the core runs) and more cores mean a faster GPU. Unfortunately, you can rely on clock speed and core count only when you're comparing cards in the same product family. AMD GPUs, for example, tend to contain more cores than Nvidia GPUs at the same price point, so raw core counts can't tell you which of the two is faster.
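The same-family rule can be written as a guard that refuses cross-family comparisons. In this sketch, `Gpu`, `rough_throughput`, and `faster_in_family` are hypothetical names. Cores multiplied by clock is only a crude proxy for speed. The two cards use made-up numbers, not real specifications.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gpu:
    name: str
    family: str      # product family / architecture label
    cores: int       # number of shader cores
    clock_mhz: int   # engine (core) clock speed


def rough_throughput(gpu: Gpu) -> int:
    """Crude proxy (cores x clock); only meaningful when comparing within one family."""
    return gpu.cores * gpu.clock_mhz


def faster_in_family(a: Gpu, b: Gpu) -> Gpu:
    """Return the likely faster card, refusing comparisons across families."""
    if a.family != b.family:
        raise ValueError("core count and clock speed are not comparable across families")
    return a if rough_throughput(a) >= rough_throughput(b) else b


# Hypothetical cards with illustrative numbers, not real specifications.
card_a = Gpu("Card A", "Family X", cores=1280, clock_mhz=1700)
card_b = Gpu("Card B", "Family X", cores=1920, clock_mhz=1650)
print(faster_in_family(card_a, card_b).name)  # Card B
```

Raising an error instead of returning a best guess is intentional. Across families the proxy is meaningless, so the sketch declines to answer.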

Onboard VRAM, Memory Bandwidth, and Memory Clock

These specs follow much the same trend as clock speed and core count (the more the better), but with one important difference: manufacturers often add far more memory to a GPU than it can plausibly use and market it as a more powerful solution. As you scale down the overall capability of a GPU, you also scale down the detail levels and resolutions it can run while maintaining a playable frame rate. Moving from a 2GB card to a 4GB card is a great idea if you know your GPU is running out of memory, but it won't do anything if your GPU is too weak to run a game at your chosen detail settings. If you're spending $150 or more, 4GB of VRAM really shouldn't be negotiable.
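Both VRAM rules of thumb fit in a few lines of Python. The $150 threshold and the 4GB floor come from the text. The function names are hypothetical, and so is the idea of comparing memory in use against installed memory (for example, as reported by whatever monitoring tool you use).

```python
def meets_vram_floor(price_usd: float, vram_gb: float) -> bool:
    """At $150 or more, treat 4GB of VRAM as the minimum."""
    if price_usd >= 150:
        return vram_gb >= 4
    return True  # the guide sets no hard floor below $150


def more_vram_would_help(vram_in_use_gb: float, vram_gb: float, gpu_fast_enough: bool) -> bool:
    """Extra memory only pays off if the card is out of VRAM and fast enough for the chosen settings."""
    return vram_in_use_gb >= vram_gb and gpu_fast_enough


print(meets_vram_floor(200, 2))             # False
print(more_vram_would_help(2.0, 2, False))  # False: the GPU itself is the bottleneck
```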

Both AMD and Nvidia now outfit their $200-plus GPUs with more than 4GB of RAM (AMD has stepped up to 8GB, while Nvidia is using 6GB). Either way, sub-4GB cards should only be used in secondary systems, at low resolutions, or for simple or older games that don't need much in the way of hardware resources. Memory bandwidth refers to how quickly data can move between the GPU and its onboard memory. More is generally better, but again, AMD and Nvidia have different architectures and sometimes different memory bandwidth requirements, so their numbers are not directly comparable. There are two ways to change a given GPU's memory bandwidth.

You can change the bus width (how much data can be transferred per clock cycle) or the memory clock speed. As for clock speed, all else being equal, the faster the RAM is clocked, the higher the available memory bandwidth will be.

How Much Should You Spend?

AMD and Nvidia are targeting light 1080p gaming at the $100 to $150 price point, higher-end 1080p and entry-level 1440p gaming at the $200 to $300 price point, and light to high-detail 1440p gaming between $300 and $400.
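A lookup over these price bands could be written as shown below. The bands and targets come straight from the text. The text leaves a gap between $150 and $200, and two bands share the $300 boundary. This hypothetical helper therefore returns `None` in the gap and lets the lower band win at exactly $300.

```python
from typing import Optional

# (low, high, target) price bands in USD, as given in the guide.
PRICE_BANDS = [
    (100, 150, "light 1080p gaming"),
    (200, 300, "higher-end 1080p and entry-level 1440p gaming"),
    (300, 400, "light to high-detail 1440p gaming"),
]


def target_for_budget(price_usd: float) -> Optional[str]:
    """Return the gaming target for a budget, or None if the guide names no band for it."""
    for low, high, target in PRICE_BANDS:
        if low <= price_usd <= high:  # first matching band wins, so $300 maps to the lower band
            return target
    return None


print(target_for_budget(250))  # higher-end 1080p and entry-level 1440p gaming
```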

If you want a GPU that can handle 4K comfortably, you'll need to spend more than $400, and a GPU that can handle 4K at high detail levels will cost $500 to $1,000. Cards in the $150 to $350 market generally offer performance improvements in line with their additional cost: if GPU A is 20 percent more expensive than GPU B, GPU A will also likely be 15 to 25 percent faster. As price increases, this rule applies less, and spending more money yields diminishing returns.

Discrete and Integrated Graphics Cards

GPUs come in two flavors: discrete and integrated.

An integrated GPU is built on the same silicon as the CPU, a configuration that is useful for thin, lightweight laptops and very small desktops. Integrated GPUs are also common in budget systems, since combining the CPU and GPU on one chip lowers cost. Integrated graphics solutions have become more powerful in recent years, but they are still generally limited to supporting older games and lower resolutions. In desktops, a discrete GPU occupies its own x16 PCI Express slot on the motherboard. One of the advantages of a desktop for gaming is that graphics cards are essentially modular: if your motherboard has an x16 PCI Express slot, it can run some kind of modern GPU.

In laptops, a discrete GPU also occupies its own slot within the laptop, but it typically can't be upgraded by the end user. Assume that whatever discrete GPU comes with your laptop is the one you'll be using throughout your PC's life.

Who Needs to Buy a Discrete GPU?

As CPUs have advanced, they've incorporated full-fledged GPUs into their designs. AMD refers to these CPU/GPU combination chips as Accelerated Processing Units (APUs), while Intel just calls them CPUs with Intel HD Graphics. (Intel uses additional labels, like Iris Pro or Iris Plus, to denote models with separate graphics caches.) Either way, integrated graphics are fully capable of meeting the needs of most general users today, with three broad exceptions.

Professional Workstation Users

These folks, who work with CAD software or in video and photo editing, will still benefit greatly from a discrete GPU.

There are also applications that can transcode video from one format to another using the GPU instead of the CPU, though whether this is faster will depend on the application in question, which GPU and CPU you own, and the encoding specifications you target.

Productivity-Minded Users With Multiple Displays

People who need a large number of displays can also benefit from a discrete GPU. Desktop operating systems are capable of driving displays connected to the integrated and discrete GPUs simultaneously. If you've ever wanted five or six displays hooked up to a single system, you can combine an integrated and discrete GPU to get there.

And of course, there's the gaming market, for which the GPU is arguably the most important component. RAM and CPU choices both matter, but if you had to pick between a top-end system circa 2014 with a 2018 GPU and a top-end system today using the highest-end GPU you could buy in 2014, you'd want the former.

Pre-Built Laptops and GPUs

Since applications like Word and Excel work fine with an integrated GPU, many ultrathin laptops don't even offer discrete GPUs as an option anymore, particularly those that focus on minimal power consumption and maximum battery life. In theory, a laptop user who wants to hook up a large number of displays can benefit from having a discrete GPU, but this depends on the manufacturer offering multiple display outputs that are connected to both the integrated GPU and the discrete graphics chip within the system. Don't assume that just because a laptop has two video-out ports, they're connected to different hardware internally. The other major considerations when evaluating a laptop GPU are heat and noise. The smaller the laptop, the harder (and louder) its fans have to work to cool the GPU.


A 17-inch laptop might be very quiet while running a game on Nvidia's GeForce GTX 1060, while a 13-inch laptop with the same GPU will likely be much noisier because it has less internal volume for cooling. A cooling pad can reduce that noise somewhat, as can capping frame rates and using lower-quality graphics settings in your games.

Pre-Built Desktops and GPUs

Generally speaking, desktops have fewer throttling and noise issues than laptops. Most pre-built desktops (OEM or boutique) these days have enough cooling capability to handle a discrete GPU or GPU upgrade with no problems. The first thing to do before buying or upgrading a GPU is to measure the inside of your chassis for the available card space. In some cases, you've got a gulf between the far right-hand edge of the motherboard and the hard drive bays. In others, you might have barely an inch.

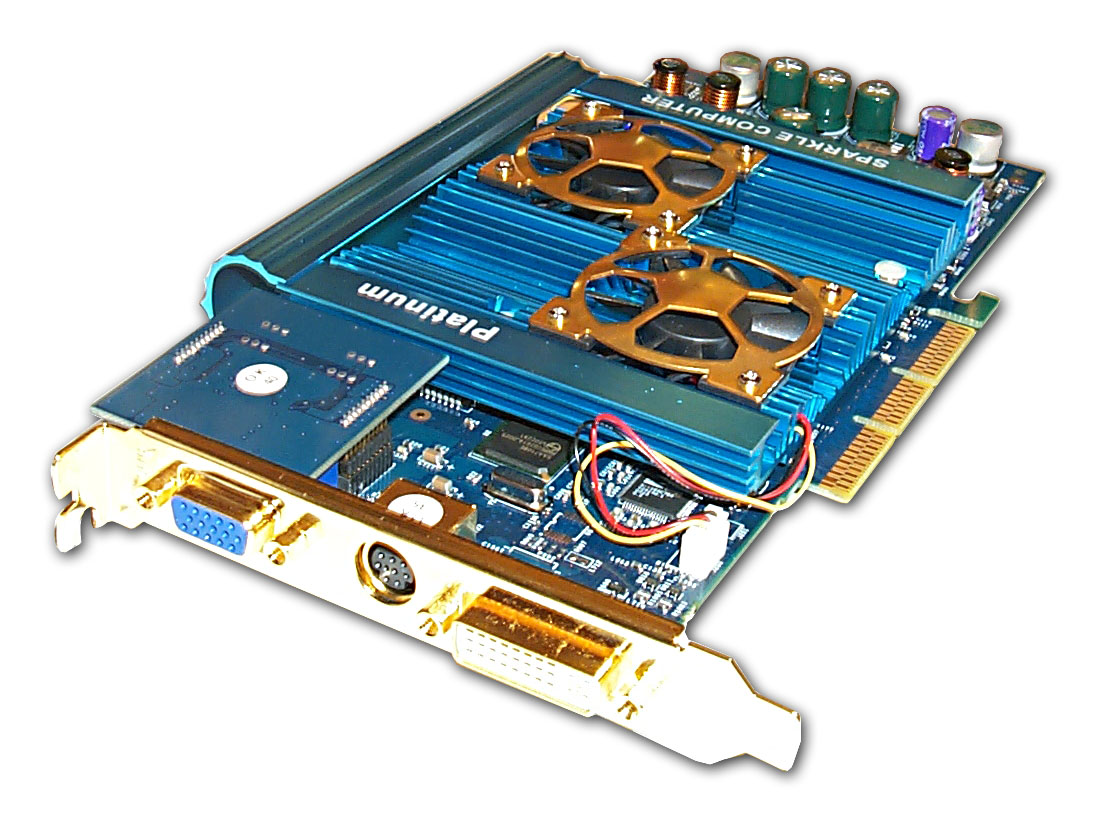

If your GPU has its additional six-pin and eight-pin power plugs on the rear of the card, leave room for hooking them up as well. Next, check your graphics card's height. Manufacturers sometimes field their own card coolers that depart from the standard reference designs provided by AMD and Nvidia. If your chosen GPU has an elaborate cooler design, make certain it isn't so bulky that it prevents your case from closing.
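The fit check described above (card length, room for rear power plugs, and cooler height) can be sketched as follows. Measurements are assumed to be in millimeters. The 30 mm allowance for rear-mounted plugs is a placeholder assumption, not a published figure, so measure your own cables. The names `CaseClearance` and `card_fits` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaseClearance:
    length_mm: float  # from the slot bracket to the nearest obstruction (e.g. drive bays)
    height_mm: float  # room above the slot before the side panel


def card_fits(card_length_mm: float, card_height_mm: float, space: CaseClearance,
              rear_power_plugs: bool, plug_allowance_mm: float = 30.0) -> bool:
    """Check length (plus an assumed allowance for rear-mounted plugs) and cooler height."""
    needed_length = card_length_mm + (plug_allowance_mm if rear_power_plugs else 0.0)
    return needed_length <= space.length_mm and card_height_mm <= space.height_mm


# Hypothetical case and card measurements.
space = CaseClearance(length_mm=300, height_mm=130)
print(card_fits(290, 111, space, rear_power_plugs=True))  # False: no room left for the plugs
```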

Finally, there's the question of the power supply unit (PSU). The two most important factors to be aware of are the number of GPU-specific six-pin and eight-pin cables on your PSU and the amperage your PSU can provide over the 12V rail, from which GPUs draw most of their power. Most modern systems, including those sold by OEMs like Dell, HP, Lenovo, and Asus, will include at least one six-pin connector. Some systems' power supplies have both a six-pin and an eight-pin connector, a relatively common configuration for higher-end GPUs. Midrange and high-end graphics cards will require a six-pin cable, an eight-pin cable, or some combination of the two to provide working power to the card.

(Lower-end cards draw all the power they need from the PCI Express slot.) Nvidia and AMD both outline recommended power supplies for each of their graphics cards. These guidelines should be taken seriously, but they are just guidelines. Neither company knows if you plan to put its GPU in a system with a huge power-hungry CPU and tons of RAM, or if you're running a low-end dual-core PC. Their guidance, therefore, is generally conservative. If AMD or Nvidia says you need 500W to run a GPU, you should take that number seriously, but you don't need to buy an 800W supply just to make sure you have some headroom. If you're planning to buy a PC from an OEM and then upgrade it later, we can't stress the importance of the PSU enough. Next to needing a physical x16 PCI Express slot on the motherboard, you need a power supply capable of feeding your upgraded GPU.
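The PSU checks can be sketched in Python. The 75 W (x16 slot), 75 W (six-pin), and 150 W (eight-pin) figures are the nominal limits from the PCI Express specifications. The function names are hypothetical, and the check ignores convertible 6+2-pin plugs. The 12V-rail conversion (watts = 12 × amps) gives total rail capacity, which the CPU and other components also share.

```python
# Nominal power limits from the PCI Express specifications (watts).
SLOT_W = 75        # x16 slot
SIX_PIN_W = 75     # 6-pin auxiliary connector
EIGHT_PIN_W = 150  # 8-pin auxiliary connector


def max_board_power_w(six_pins: int, eight_pins: int) -> int:
    """Upper bound on what a card can draw through its slot plus auxiliary connectors."""
    return SLOT_W + six_pins * SIX_PIN_W + eight_pins * EIGHT_PIN_W


def twelve_volt_capacity_w(amps: float) -> float:
    """Convert a PSU's 12V-rail rating from amps to watts (P = V x I)."""
    return 12.0 * amps


def psu_can_feed(card_six: int, card_eight: int, psu_six: int, psu_eight: int,
                 psu_watts: int, recommended_watts: int) -> bool:
    """Enough connectors of each type, plus at least the vendor-recommended wattage."""
    return psu_six >= card_six and psu_eight >= card_eight and psu_watts >= recommended_watts


print(max_board_power_w(1, 1))      # 300
print(twelve_volt_capacity_w(40))   # 480.0
```

Comparing against the vendor's recommendation rather than adding a large safety margin follows the guide's point that the recommendations are already conservative.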

Ports and Preferences

There are three ports you are likely to find on the rear edge of a current graphics card: DVI, HDMI, and DisplayPort. Some systems and monitors still use DVI, but it's the oldest of the three standards and is being dropped from some of the very highest-end cards today. When it comes to HDMI vs. DisplayPort, there are some differences to be aware of. First, if you plan on using this system with a 4K display, either now or in the future, your GPU needs to support at least HDMI 2.0a or DisplayPort 1.2/1.2a. It's fine if the GPU supports anything above those versions, like HDMI 2.0b or DisplayPort 1.3, but that's the minimum you'll want for smooth 4K playback or gaming.
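The 4K port minimums can be expressed as a version comparison. This sketch assumes version strings shaped like "2.0a" or "1.3". The dictionary reflects the minimums stated in the text, using DisplayPort 1.2 as the floor. The text gives no 4K minimum for some ports, such as DVI, and this hypothetical helper simply treats those as unsuitable.

```python
import re
from typing import Tuple

# Minimum port versions for 4K per the guide; ports missing here (e.g. DVI) count as unsuitable.
MIN_4K_VERSION = {"HDMI": "2.0a", "DisplayPort": "1.2"}


def parse_version(version: str) -> Tuple[int, int, str]:
    """Turn '2.0a' into (2, 0, 'a'); an absent letter ('') sorts before 'a'."""
    match = re.fullmatch(r"(\d+)\.(\d+)([a-z]?)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    major, minor, letter = match.groups()
    return int(major), int(minor), letter


def port_ok_for_4k(port: str, version: str) -> bool:
    """True if the port/version meets the 4K minimum listed in MIN_4K_VERSION."""
    minimum = MIN_4K_VERSION.get(port)
    if minimum is None:
        return False
    return parse_version(version) >= parse_version(minimum)


print(port_ok_for_4k("HDMI", "2.0b"))         # True
print(port_ok_for_4k("DisplayPort", "1.2a"))  # True
print(port_ok_for_4k("HDMI", "1.4"))          # False
```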

The latest-gen cards from both makers should be fine on this score. The one potential advantage of DisplayPort over HDMI is the ability to daisy-chain multiple displays. Instead of needing three ports to connect three monitors, you have the option of connecting Monitor 1 to your PC, Monitor 2 to Monitor 1, and Monitor 3 to Monitor 2. There are restrictions depending on DisplayPort version support and resolution.

Looking Into the (Near) Future

The overall pace of hardware improvements has slowed dramatically across the industry, but GPUs still evolve a fair bit year-on-year. If your goal is to make a high-end purchase of $500 or more and use the GPU for three to five years, you should steer toward the upper end of the market. If you prefer midrange upgrades with a shorter refresh cycle, you could spend $150 to $300 every 18 to 24 months.
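To compare the two upgrade strategies, you can spread each purchase over the time you keep the card. This simplified sketch ignores resale value and inflation. The prices and lifetimes are the ranges given in the text, and `annual_cost` is a hypothetical helper.

```python
def annual_cost(price_usd: float, months_kept: float) -> float:
    """Spread a card's purchase price over the months you keep it (ignores resale value)."""
    return price_usd * 12 / months_kept


# High-end card kept three to five years
print(annual_cost(500, 60), annual_cost(500, 36))
# Midrange card replaced every 18 to 24 months
print(annual_cost(150, 24), annual_cost(300, 18))
```

With those inputs, the high-end strategy works out to roughly $100 to $167 per year. The midrange strategy comes to roughly $75 to $200 per year.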


The other major question to ask is when and if you intend to adopt any of the cutting-edge display technologies that are steadily making their way into homes from showroom floors. Of all the near-term technologies coming to displays and graphics cards, 4K and virtual reality (VR) have the greatest chance of catching on with mainstream consumers.

With 4K, the tradeoff is between graphical detail in any given title and the frame rate that title can maintain at 3,840-by-2,160 resolution. If you're willing to play at lower graphics quality presets (or if you're playing an older game), you can get away with using a GPU in the $350 to $500 price range for decent 4K gameplay. If, on the other hand, you want an uncompromising experience with as few tradeoffs between detail levels and resolution as possible, there's only one GPU to consider: Nvidia's GeForce GTX 1080 Ti. AMD's GTX 1080 competitor, released in mid-2017, matches the GTX 1080 in terms of pure graphics performance, but it requires significantly more power and has seen its prices soar due to its popularity with cryptocurrency miners. VR's requirements are slightly different. Both of the major PC VR headsets have an effective resolution across both eyes of 2,160 by 1,200.

That's significantly lower than 4K, and it's the reason why midrange GPUs like AMD's Radeon RX 580 or Nvidia's GeForce GTX 1060 can be used for VR. On the other hand, VR demands higher frame rates than conventional gaming.
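A little arithmetic makes the resolution-versus-frame-rate tradeoff concrete. The 2,160-by-1,200 VR figure and the 90 fps threshold come from the text. The 60 fps target for 4K is an assumption chosen for comparison. The numbers suggest that 4K has about 3.2 times as many pixels per frame, while VR leaves only about 11 ms to render each frame.

```python
def pixel_count(width: int, height: int) -> int:
    return width * height


def pixel_rate(width: int, height: int, fps: int) -> int:
    """Pixels the GPU must deliver per second at a given frame rate."""
    return pixel_count(width, height) * fps


def frame_budget_ms(fps: int) -> float:
    """Time available to render one frame, in milliseconds."""
    return 1000 / fps


uhd = pixel_count(3840, 2160)         # 8,294,400
vr = pixel_count(2160, 1200)          # 2,592,000 (both eyes combined)
print(uhd / vr)                       # 3.2
print(pixel_rate(2160, 1200, 90))     # 233280000
print(pixel_rate(3840, 2160, 60))     # 497664000 (assumed 60 fps target)
print(round(frame_budget_ms(90), 1))  # 11.1
```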

Low frame rates in VR (anything below 90 frames per second is considered low) can result in a bad gaming experience. Higher-end GPUs in the $300-plus category are going to offer better VR experiences today and more longevity overall, but VR with current-generation headsets can be sustained on a lower-end card than 4K can.

The Top Graphics Cards (for Now)

The GPUs below span the spectrum from budget to high-end, representing a wide range of the best cards that are available now.

We'll update this story as the graphics card landscape changes, so check back often for the latest products and buying advice. Note that the popularity of cryptocurrency mining sent the prices of many midrange and high-end graphics cards skyrocketing in 2017 and early 2018, warping the market. As the difficulty of mining certain popular currencies (such as Ethereum) with video cards has increased, demand for cards has slowed, and as we write this in mid-2018, we're seeing card prices return to a semblance of normalcy. Even so, card pricing in the last year or so has been enormously volatile, and street prices for some of the GPUs in this roundup could still be much higher than listed here.

Pros: Sticks close to the performance of the GTX 1080 at a significantly lower price. Often bests the pricier AMD Radeon RX Vega 64.

Cons: Same 'Pascal' architecture as (and similar performance to) 10-Series cards that rolled out in mid-2016.

Bottom Line: Nvidia's latest high-end card doesn't bring much in the way of new features or levels of performance. But it's nearly as powerful as a GTX 1080, at an enticing $449 price point that seems designed to undercut AMD's Vega-based alternatives.